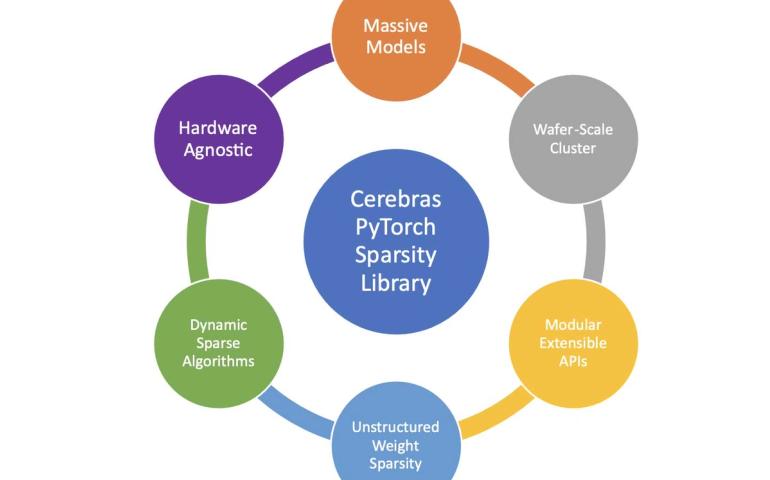

Sparsity Made Easy – Introducing the Cerebras PyTorch Sparsity Library - Cerebras

February 05, 2024

Blog

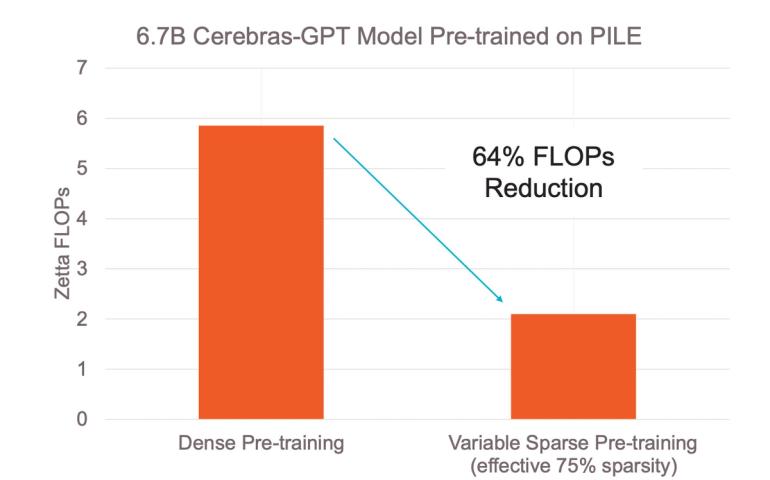

Accelerating Large Language Model Training with Variable Sparse Pre-training and Dense Fine-tuning - Cerebras

July 22, 2023

Blog

Efficient Large-Scale GPT Training Using a Cerebras Wafer-Scale Cluster - Cerebras

May 23, 2023

Blog