Don't be lazy: CompleteP enables compute-efficient deep transformers

May 02, 2025

Publication

The Practitioner’s Guide to the Maximal Update Parameterization

September 23, 2024

Blog

BTLM-3B-8K: 7B Performance in a 3 Billion Parameter Model

July 24, 2023

Blog

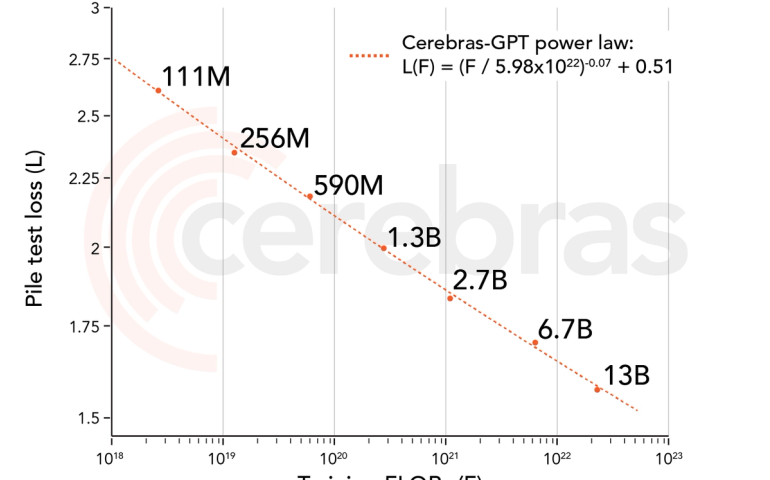

Cerebras-GPT: A Family of Open, Compute-efficient, Large Language Models

March 28, 2023

Blog