Cerebras and G42 Break Ground on Condor Galaxy 3, an 8 exaFLOPs AI Supercomputer

Featuring 64 Cerebras CS-3 Systems, Condor Galaxy 3 Doubles Performance at Same Power and Cost

Cerebras Systems Unveils World’s Fastest AI Chip with Whopping 4 Trillion Transistors

Third Generation 5nm Wafer Scale Engine (WSE-3) Powers Industry’s Most Scalable AI Supercomputers, Up To 256 exaFLOPs via 2048 Nodes

Cerebras Selects Qualcomm to Deliver Unprecedented Performance in AI Inference

Best-in-class solution developed with Qualcomm® Cloud AI 100 Ultra offers up to 10x more tokens per dollar, radically lowering operating costs of AI deployment

Cerebras, 2023’s Most Successful AI Startup, Sees A Bright Future

With revenue and customer commitments approaching $1B, Cerebras’ Wafer Scale Engine has likely generated more business than all other startup players combined. Needless to say, CEO Andrew Feldman is feeling pretty bullish about 2024.

Cerebras Systems Hires Industry Luminary Julie Shin Choi as Senior Vice President and Chief Marketing Officer

Cerebras Systems, the pioneer in accelerating generative AI, today announced that it has hired industry expert Julie Shin Choi as its Senior Vice President and Chief Marketing Officer. In this role, she will oversee global brand and communications, community engagement, demand generation and all go-to-market activities. Choi will also join the Cerebras executive team where she will help accelerate the growth and adoption of Cerebras technology into existing markets, as well as new ones.

Cerebras Systems Announces 130x Performance Improvement on Key Nuclear Energy Simulation over Nvidia A100 GPUs

Continuous Energy Monte Carlo Particle Transport Kernel Outperforms Highly Optimized GPU Version, Unlocking New Potential in Fission and Fusion Reactor Simulations

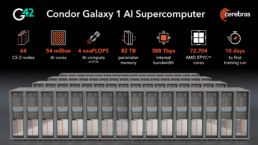

Cerebras and G42 Complete 4 exaFLOP AI Supercomputer and Start the March Towards 8 exaFLOPs

Condor Galaxy takes flight towards the goal of nine interconnected supercomputers with 36 exaFLOPs of AI compute capacity

Core42 Sets New Benchmark for Arabic Large Language Models with the Release of Jais 30B

Latest Jais model iteration shows stronger performance across content generation, summarization, Arabic-English translation

Cerebras’ dinner plate-sized chip challenges Nvidia GPU

Historically, a single wafer has been split into thousands of smaller chips.

Cerebras Systems Selects VAST Data to Accelerate the Next Wave of Data Intensive Generative AI Workloads

VAST’s scalable, secure central data foundation is already powering one of the world’s largest AI clouds, a multi-hundred petabyte AI lake at Core42

M42 Announces New Clinical LLM to Transform the Future of AI in Healthcare

M42, a global tech-enabled healthcare network, has unveiled an impactful advancement in healthcare technology with the launch of Med42, a new open-access Clinical Large Language Model (LLM). The 70 billion parameter, generative artificial intelligence (AI) model is poised to transform the future of AI across the healthcare sector and create a direct impact on patient care outcomes.

KAUST and Cerebras Named Gordon Bell Award Finalist for Solving Multi-Dimensional Seismic Processing at Record-Breaking Speeds

Run on Condor Galaxy 1 AI Supercomputer, seismic processing workloads achieve application-worthy accuracy at unparalleled memory bandwidth, enabling a new generation of seismic algorithms

Cerebras Systems Promotes Dhiraj Mallick to Chief Operating Officer

Cerebras Systems, the pioneer in accelerating generative AI, today announced it has promoted Dhiraj Mallick to serve as the company’s chief operating officer (COO).

Meet “Jais”, The World’s Most Advanced Arabic Large Language Model Open Sourced by G42’s Inception

Developed in partnership with MBZUAI, Jais was trained on the Condor Galaxy 1 AI supercomputer on 116 billion Arabic tokens and 279 billion English tokens of data

In G42, Cerebras Finds The Deep Pockets And Partnership It Needs To Grow – The Next Platform

When you are competing against the hyperscalers and cloud builders in the AI revolution, you need backers as well as customers that have deep pockets and that can not only think big but pay big. Cerebras Systems is in just such a position as it tries to push its well-regarded CS-2 wafer-scale matrix math engines against GPUs from Nvidia and AMD, TPUs from Google, and a seemingly endless number of upstarts.

AI startup Cerebras built a gargantuan AI computer for Abu Dhabi’s G42 with 27 million AI ‘cores’

Condor Galaxy is the first stage in a partnership between the two firms expected to ultimately lead to hundreds of millions of dollars for a clustered system spanning multiple continents.

Here comes the world’s fastest AI supercomputer, thanks to the UAE’s G42

The world's fastest supercomputer built for generative AI projects is more than 20 times faster than the previous one.

100M USD Cerebras AI Cluster Makes it the Post-Legacy Silicon AI Winner

Cerebras is innovating on more than just its chips; it is also the first major AI chipmaker to deploy and sell its AI accelerators in a cloud that it operates at this scale.

Cerebras Sells $100 Million AI Supercomputer, Plans Eight More

G42 and Cerebras have partnered to build a significant AI supercomputer, Condor Galaxy 1, to be built this year in nearby Santa Clara.

Cerebras unveils world’s largest AI training supercomputer with 54M cores

The network of nine interconnected supercomputers promises to reduce AI model training time significantly.

An A.I. Supercomputer Whirs to Life, Powered by Giant Computer Chips

The new supercomputer, made by the Silicon Valley start-up Cerebras, was unveiled as the AI boom drives demand for chips and computing power.

Cerebras Lands Huge AI Deal: A 36 Exaflop Monster

The Wafer-Scale Engine company has been working for 8 years to break into the top tier of AI computing. This deal might just assure its success.

Cerebras and G42 Unveil World’s Largest Supercomputer for AI Training with 4 exaFLOPs to Fuel a New Era of Innovation

Launching today with its first of nine interconnected AI supercomputers, the Condor Galaxy system will reach a combined AI training capacity of 36 exaFLOPs

Huge chips. Big AI advantage.

Andrew Feldman is making the world's largest semiconductor chip. And he's hitting the market at the right time.

Understanding the impact of open-source language models

In a recent interview with TechTalks, Andrew Feldman, CEO of Cerebras Systems, discussed the implications of closed models and the efforts to create open-source LLMs.

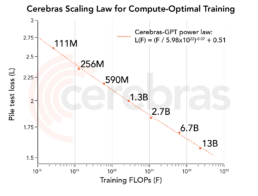

Cerebras’ Open-Source GPT Models Trained at Wafer Scale

Cerebras has open-sourced seven trained GPT-class large language models (LLMs), ranging in size from 111 million to 13 billion parameters, for use in research or commercial projects without royalties, Cerebras CEO Andrew Feldman told EE Times. The models were trained in a matter of weeks on Cerebras CS-2 wafer-scale systems in its Andromeda AI supercomputer.

AI pioneer Cerebras opens up generative AI where OpenAI goes dark

The AI computer maker released seven open-source models like OpenAI's GPT as a resource to the research community.

Cerebras Smashes AI Wide Open, Countering Hypocrites

We could have a long, thoughtful, and important conversation about the way AI is transforming the world. But that is not what this story is about. What it is about is how very few companies have access to the raw AI models that are transforming the world, and to the curated datasets, purged of bias (to one degree or another), that are fundamental to training AI systems.

AI computing startup Cerebras releases open source ChatGPT-like models

Artificial intelligence chip startup Cerebras Systems on Tuesday said it released open source ChatGPT-like models for the research and business community to use for free in an effort to foster more collaboration.

Cerebras Publishes 7 Trained Generative AI Models To Open Source

The AI company is the first to use non-GPU technology to train GPT-based large language models and make them available to the AI community.

Cost-effective Fork of GPT-3 Released to Scientists

Cerebras is releasing open-source learning models for researchers with the ingredients necessary to cook up their own ChatGPT-AI applications.

Cerebras Systems Releases Seven New GPT Models Trained on CS-2 Wafer-Scale Systems

Cerebras-GPT Models Set Benchmark for Training Accuracy, Efficiency, and Openness.

Researchers Can Get Free Access to World’s Largest Chip Supporting GPT-3

The Pittsburgh Supercomputing Center wants to provide free access to the world’s largest chip for researchers to run transformer models like GPT-3.

In the Whirl of ChatGPT, Startups See an Opening for Their AI Chips

As major chip players ... rush to capitalize on the popularity of generative artificial intelligence, startups are seeing their chance to grab a bigger piece of that pie as well. Cerebras Systems Inc., a Sunnyvale, Calif.-based chip company founded in 2016, has been able to capitalize on some of that interest.

A Digital Twin for Intense Weather Gives Scientists a ‘Control Loop’

Using a Cerebras CS-2 computer, scientists are able to simulate large computational fluid dynamics experiments in near-real time, which they say may have dramatic implications for energy and climate change projects.

Decarbonization Initiative at NETL Gets Computing Boost

A major initiative by U.S. President Joe Biden called EarthShots to decarbonize the power grid by 2035 and the U.S. economy by 2050 is getting a significant boost through a computing breakthrough at the National Energy Technology Laboratory. The performance gains are coming on a system with chips from Cerebras Systems.

National Energy Technology Laboratory and Pittsburgh Supercomputing Center Pioneer First Ever Computational Fluid Dynamics Simulation on Cerebras Wafer-Scale Engine

Running on Cerebras CS-2 within PSC’s Neocortex, NETL Simulates Natural Convection with Multi-Hundred Million Cell Resolutions, Pointing the Way to More Powerful, Energy Efficient and Insightful Scientific Computing

CFD Could Be the Engine that Drives Waferscale Mainstream

The next decade might demonstrate waferscale as one of only a few bridges across the post-Moore’s Law divide, at least for some applications. Luckily for the only maker of such a system, Cerebras, those areas are among the most high-value workloads in large industry.

Green AI Cloud and Cerebras Systems Bring Industry-Leading AI Performance and Sustainability to Europe

As the Only European Provider of Cerebras Cloud, Green AI Cloud Delivers AI Super Compute in an Easy-to-Use Solution for AI and ML Applications, Data Science and Simulation Workloads

Cerebras Announces Pay-As-You-Go AI Training On Cirrascale Cloud

Cerebras is not your typical AI chip company. And that's a good thing. We already have a lot of knock-offs taking on NVIDIA, and they all seem to do a good job of knocking only themselves out. Now Cerebras has announced new cloud access on Cirrascale with a fixed-price schedule to train foundation models on its hardware.

Cerebras Systems and Cirrascale Cloud Services® Introduce Cerebras AI Model Studio to Train GPT-Class Models with 8x Faster Time to Accuracy, at Half the Price of Traditional Cloud Providers

With Predictable Fixed Pricing, Faster Time to Solution, and Unprecedented Flexibility and Ease of Use, Customers Can Train GPU-Impossible Sequence Lengths and Keep Trained Weights

Cerebras Systems and Jasper Partner on Pioneering Generative AI Work

As one of the first customers to leverage Cerebras’ new Andromeda AI supercomputer to design the next set of customer-specific models, Jasper expects to vastly improve the accuracy of generative AI models

New Cerebras Wafer-Scale ‘Andromeda’ Supercomputer Has 13.5 Million Cores

Cerebras unveiled its new AI supercomputer Andromeda at SC22. With 13.5 million cores across 16 Cerebras CS-2 systems, Andromeda boasts an exaflop of AI compute and 120 petaflops of dense compute. Its computing workhorse is Cerebras’ wafer-scale, manycore processor, WSE-2.

ACM Gordon Bell Special Prize for HPC-Based COVID-19 Research Awarded to Team for Modelling How Pandemic-Causing Viruses, Especially SARS-CoV-2, are Identified and Classified

ACM, the Association for Computing Machinery, awarded the 2022 ACM Gordon Bell Special Prize for High Performance Computing-Based COVID-19 Research to an international team for their project “GenSLMs: Genome-Scale Language Models Reveal SARS-CoV-2 Evolutionary Dynamics.” The Prize recognizes outstanding research achievement toward the understanding of the COVID-19 pandemic through the use of high-performance computing.

HPCwire Reveals Winners of the 2022 Readers’ and Editors’ Choice Awards During SC22

HPCwire, the leading publication for news and information for the high performance computing industry, announced the winners of the 2022 HPCwire Readers’ and Editors’ Choice Awards at the Supercomputing Conference (SC22) taking place this week in Dallas, Texas. Tom Tabor, CEO of Tabor Communications, unveiled the list of 137 winners across 21 categories just before the opening gala reception.

Gordon Bell Nominee Used LLMs, HPC, Cerebras CS-2 to Predict Covid Variants

The research leveraged several major supercomputers, as well as a major AI-focused system from Cerebras Systems, applying LLMs to transform how new and emergent variants of viruses like SARS-CoV-2 are identified and classified. (Subsequently, this work won the Gordon Bell Special Prize for HPC-based COVID-19 research.)

Cerebras Wants Its Piece Of An Increasingly Heterogeneous HPC World

We live in a world that has become accustomed to cloud computing, and now it is perfectly acceptable to do timesharing on such machines to test ideas out. This is precisely what Cerebras is doing as it stands up a 13.5 million core AI supercomputer nicknamed “Andromeda” in a colocation facility run by Colovore in Santa Clara, the very heart of Silicon Valley.

Hungry for AI? New supercomputer contains 16 dinner-plate-size chips

Exascale Cerebras Andromeda cluster packs more cores than 1,954 Nvidia A100 GPUs.

Silicon Valley chip startup Cerebras unveils AI supercomputer

Silicon Valley startup Cerebras Systems, known in the industry for its dinner plate-sized chip made for artificial intelligence work, on Monday unveiled its AI supercomputer called Andromeda, which is now available for commercial and academic research.

Cerebras Unveils Andromeda, a 13.5 Million Core AI Supercomputer that Delivers Near-Perfect Linear Scaling for Large Language Models

Delivering more than 1 Exaflop of AI compute and 120 Petaflops of dense compute, Andromeda is one of the largest AI supercomputers ever built, and is dead simple to use

Cerebras Systems and National Energy Technology Laboratory Set New Milestones for High-Performance, Energy-Efficient Field Equation Modeling Using Simple Python Interface

The Cerebras CS-2 and its Wafer-Scale Engine Outperform Leading Supercomputer by Over Two Orders of Magnitude in Time to Solution, Delivering CPU and GPU-Impossible Performance

Waferscale, meet atomic scale: Uncle Sam to test Cerebras chips in nuke weapon sims

Thermonuclear warheads, so hot right now

Sandia awards Advanced Memory Technology R&D contract to Cerebras Systems

Investigating stockpile stewardship applications for world’s largest computer chip

Cerebras Systems visit report

Beyond the Wafer Scale Engine, their challenge doesn't end with just making the biggest chip ever

Tesla Dojo AI Supercomputer Deep Dive and Analysis

Comparison to Cerebras

Wisdom From The Women Leading The AI Industry, With Lakshmi Ramachandran of Cerebras Systems


Andrew Feldman: Cerebras and AI Hardware

A conversation with Andrew Feldman, co-founder and CEO of Cerebras Systems.

New Cerebras Wafer-Scale Cluster Eliminates Months Of Painstaking Work To Build Massive Intelligence

The hottest trend in AI is the emergence of massive models such as OpenAI’s GPT-3. These models are surprising even their developers with capabilities that some claim approach human sentience. Our analyst, Alberto Romero, illustrated these capabilities with an image he created from a simple prompt using Midjourney, an AI similar to OpenAI’s DALL·E. Large language models will continue to evolve into powerful tools for businesses from pharmaceuticals to finance, but first they need to become easier and more cost-effective to create.

Cerebras Proposes AI Megacluster with Billions of AI Compute Cores

Chipmaker Cerebras is patching together its chips, already considered the world’s largest, to create what could be the largest-ever computing cluster for AI computing.

A Big Chip Is a Good Thing – One of the World’s Largest AI Accelerators

The Cerebras Wafer-Scale Cluster delivers near-perfect linear scaling across hundreds of millions of AI-optimized compute cores while avoiding the pain of the distributed compute.

Radar Trends to Watch: September 2022

Cerebras, the company that released a gigantic (850,000 core) processor, claims their chip will democratize the hardware needed to train and run very large language models by eliminating the need to distribute computation across thousands of smaller GPUs.

How Cerebras Builds Its Wafer-Sized Chip

Cerebras' Wafer Scale Engine impresses not only with its size but also with its architecture. The chip computes 7.5 petaflops.

Cerebras Systems CS-2 for Long-Read Sequences

Cerebras Systems said that its CS-2 high-performance computing accelerator is now capable of training artificial intelligence models on 20X longer sequences than "traditional" hardware, including graphics processing units. The company expects this new capability to lead to advances in natural language processing in drug discovery, particularly by allowing researchers to examine individual genes in the context of many thousands of surrounding genes.

AI Leaders Podcast #27: Transformers, Massive Models and the future of AI

Jean-Luc Chatelain, Applied Intelligence CTO, talks with Andrew Feldman, Founder and CEO of Cerebras Systems, about transformers, massive models and the future of AI. They discuss how we are moving from a world of many models to fewer, more powerful models known as transformers. Hear what they think this means for the future of AI.

Animal Speech, AI Pilot, Wrongful Arrest, Redesigning Streets

Our 106th episode with a summary and discussion of last week's big AI news! Apologies for the lack of consistent episode releases lately; we've been really busy...

Cerebras Wafer Scale Engine WSE-2 and CS-2 at Hot Chips 34

It’s that time of year again—Hot Chips will soon be upon us. Taking place as a virtual event on August 21–23, the conference will once again present the very latest in microprocessor architectures and system innovations.

The Future Is Now: Cindy Orozco Bohorquez Of Cerebras Systems On How Their Technological Innovation Will Shake Up The Tech Scene

On the professional side, it’s very easy to underestimate the power of communication. In my field, when you interact with executives, customers, users…you need to be able to translate and present your ideas and the key points to any audience. It’s very valuable for people, especially those of us in the technical field or mathematics, to understand and harness the power of communication.

Here Comes Hot Chips!


New Uses For AI In Chips

Artificial intelligence is being deployed across a number of new applications, from improving performance and reducing power in a wide range of end devices to spotting irregularities in data movement for security reasons.

Teach Yourself About Hot Chips: 2022 Preview

We talk a lot about chips on this channel, so it stands to reason that there's a conference about the hottest chips around! Introducing Hot Chips 2022, this year's place-to-be about silicon.

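AI Company Cerebras’ World’s Largest Processor Goes to a Museum – The CEO and Others on the Past and Future

Artificial intelligence (AI) startup Cerebras Systems was honored on August 3 (US time) for its place in the tradition of computing technology at a ceremony held at the Computer History Museum in Mountain View, California. The museum began exhibiting Cerebras’ second-generation AI chip, the Wafer-Scale Engine 2 (WSE-2), the largest computer chip ever made. The chip was announced in 2021 and powers the company’s new CS-2 supercomputer.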

Cerebras, the AI Specialist, Scores a Success in Chips

In technology, every invention builds on the successes and failures that came before it.

In early August, AI start-up Cerebras Systems was honored for carrying on this tradition at a ceremony at the Computer History Museum in Mountain View, California.

Last Week in AI #179: AI to save threatened species, ace university math, talk to animals, and more!

How machine learning could help save threatened species from extinction, new algorithm aces university math course questions, a 175B parameter publicly available chatbot

Bigger, Better – But Also Smarter?

What is probably the world's largest computer chip could soon simulate an artificial brain that rivals the human brain in scale. Here is why it will still hardly think like a human.

A.I. Is Not Sentient. Why Do People Say It Is?

The problem is that the people closest to the technology — the people explaining it to the public — live with one foot in the future. They sometimes see what they believe will happen as much as they see what is happening now.

“There are lots of dudes in our industry who struggle to tell the difference between science fiction and real life,” said Andrew Feldman, chief executive and founder of Cerebras, a company building massive computer chips that can help accelerate the progress of A.I.

13 hot chip and semiconductor startups investors are betting on to fill supply-chain gaps and compete with giants like Intel and Nvidia

To get a better sense of why VCs are betting on chip startups, Insider compiled a list of several startups investors say are bringing new technological strides to the field. The firms range from chips designed for artificial intelligence and machine learning to semiconductors embedded in clothes. The firms on this list were picked in consultation with VCs and analysts. They were chosen for their funding, leadership, and other criteria to help determine which will stick around.

THE BIGGEST CHIP IN THE WORLD

The silicon chip, or integrated circuit (IC), is one of humankind’s most magnificent, complex, and transformative creations.

Computer History Museum Honors Cerebras Systems – Watch a Replay of the Event

When Cerebras Systems had its coming out at Hot Chips in August 2019, the hardware community wasn’t sure what to think. Attendees were understandably skeptical of the novel “wafer-scale” technology, not to mention an estimated power envelope of ~15 kilowatts for the chip alone. In the intervening three years, the company – under the direction of founder and CEO Andrew Feldman – has won over early critics through a series of impressive milestones.

AI startup Cerebras celebrated for chip triumph where others tried and failed

Company honored by Computer History Museum for cracking the code of making giant chips, with 'stunning' implications.

LRZ’s Future Rests on Exascale, AI, and Quantum Computing

On July 14, the Leibniz Supercomputing Centre (LRZ) of the Bavarian Academy of Sciences and Humanities celebrated its 60th anniversary. The high-performance computing centre, which among other systems houses the “SuperMUC” supercomputer, is the IT service provider for Munich’s universities and Bavarian higher-education institutions, as well as a cooperation partner for scientific institutions in Bavaria, Germany, and Europe. But in IT, age counts for only so much. What comes next?

The Microchip Era Is Giving Way to the Megachip Age

To continue making our gadgets more powerful, engineers have worked out a new way to get around the barriers to making microchips faster: Just make them bigger.

Democratizing the hardware side of large language models

There is growing interest in democratizing large language models and making them available to a broader audience. However, the hardware barriers of large language models have gone mostly unaddressed. This is one of the problems that Cerebras, a startup that specializes in AI hardware, aims to solve with its Wafer-Scale processor. In an interview with TechTalks, Cerebras CEO Andrew Feldman discussed the hardware challenges of LLMs and his company’s vision to reduce the costs and complexity of training and running large neural networks.

NETL Researchers Work to Unlock Potential of Artificial Intelligence in Climate Modeling

Researchers at the U.S. National Energy Technology Laboratory (NETL) are helping the National Center for Atmospheric Research (NCAR) unlock the potential of an advanced artificial intelligence (AI) computing resource to perform critical climate modeling that could lead to better climate change predictions.

Meet the nominees for the 2022 VentureBeat Women in AI Awards!

Two Cerebras Systems engineers are finalists for VentureBeat's Women in AI awards!

Age Checks, Theft Prevention, Minecraft AI, Autism, Responsible AI

Discussion of last week's big AI news -- including Cerebras Systems Sets Record for Largest AI Models Ever Trained on a Single Device!

Cerebras trains 20 billion parameter AI model on a single system, sets new record

US semiconductor startup Cerebras claims that it has trained the largest AI model on a single device. The company trained AI models with 20 billion parameters on its Wafer Scale Engine 2 (WSE-2) chip, the world's largest chip.

Training a 20-Billion Parameter AI Model on a Single Processor

Cerebras has shown off the capabilities of its second-generation wafer-scale engine, announcing it has set the record for the largest AI model ever trained on a single device.

For the first time, a natural language processing network with 20 billion parameters, GPT-NeoX 20B, was trained on a single device. Here’s why that matters.

Cerebras breaks record for largest AI models trained on a single device

Cerebras said it can reduce the engineering time to run large NLP models from months to minutes, making it more cost-effective and accessible.

Why The Cerebras CS-2 Machine is a Big Deal

In my first video talking about AI Hardware on this channel, I talk in detail about an exciting Cerebras CS-2 announcement!

Cerebras Slays GPUs, Breaks Record for Largest AI Models Trained on a Single Device

Democratizing large AI Models without HPC scaling requirements.

Cerebras Systems Thinks Forward on AI Chips as it Claims Performance Win

Cerebras Systems makes the largest chip in the world, but is already thinking about its upcoming AI chips as learning models continue to grow at breakneck speed.

Cerebras Systems sets record for largest AI models ever trained on one device

Cerebras Systems said it has set the record for the largest AI models ever trained on a single device, which in this case is a giant silicon wafer with hundreds of thousands of cores.

Cerebras just built a big chip that could democratize AI

Chip startup Cerebras has developed a foot-wide piece of silicon, compared to average chips measured in millimeters, that makes training AI cheap and easy.

#77 – VITALIY CHILEY (Cerebras)

Cerebras engineer Vitaliy Chiley joins Machine Learning Street Talk to discuss deep learning, sparsity and different compute architectures for artificial intelligence.

Cerebras HPC Acceleration ISC 2022

At ISC 2022 I had the opportunity to sit down with Andy Hock at Cerebras. This was very cool: even after I told Cerebras and HPE that STH would not cover the HPE Superdome plus one Cerebras CS-2 being installed at LRZ for ISC, Andy still agreed to meet. As a result, we get a little article about the experience. This was one of the more interesting conversations since we veered fairly far from the Cerebras product. Instead, what I wanted to do was just give a perspective, reinforced by this discussion, on what Cerebras is doing to differentiate itself against a huge competitor (NVIDIA).

A New Machine for AI and HPC

Hardware demands keep rising in AI and high-performance computing. The answer lies in new approaches to chip and computer design. The Leibniz Supercomputing Centre (LRZ) in Garching will now implement a novel combined architecture.

NCSA Deploys Cerebras CS-2 in New HOLL-I Supercomputer for Large-Scale AI

Cerebras Systems, the pioneer in high performance artificial intelligence (AI) computing, today announced that the National Center for Supercomputing Applications (NCSA) has deployed the Cerebras CS-2 system in their HOLL-I supercomputer.

Leading Supercomputer Sites Choose Cerebras for AI Acceleration

Cerebras Systems, the pioneer in high performance artificial intelligence (AI) computing, shared news about their many supercomputing partners including Edinburgh Parallel Computing Centre (EPCC), Leibniz Supercomputing Centre (LRZ), Lawrence Livermore National Laboratory, Argonne National Laboratory (ANL), the National Center for Supercomputing Applications (NCSA), and the Pittsburgh Supercomputing Center (PSC).

LRZ Adds Mega AI System as It Stacks up on Future Computing Systems

A European supercomputing hub near Munich, called the Leibniz Supercomputing Centre, is deploying Cerebras Systems’ CS-2 AI system as part of an internal initiative called Future Computing to assess alternative computing technologies to inject more speed into the region’s scientific research.

HPE, Cerebras build AI supercomputer for scientific research

Wafer madness hits the LRZ in HPE Superdome supercomputer wrapper

Munich Installs Giant AI Chip

The Leibniz Supercomputing Centre (LRZ) is the first site in Europe to buy a CS-2 system with Cerebras' WSE-2, which is both efficient and fast.

Leibniz Supercomputing Centre to deploy HPE-Cerebras supercomputer

The Leibniz Supercomputing Centre (LRZ) in Germany plans to deploy a new supercomputer featuring the HPE Superdome Flex server and the Cerebras CS-2 system.

HPE is building a rapid AI supercomputer powered by the world’s largest CPU

Hewlett Packard Enterprise (HPE) has announced it is building a powerful new AI supercomputer in collaboration with Cerebras Systems, maker of the world’s largest chip.

Bio-IT World Judges, Community Honor Six Outstanding New Products

The Cerebras CS-2 system was awarded a Best of Show award as an innovative solution to important problems facing the life sciences industry.

Argonne Talks AI Accelerators for COVID Research

As the pandemic swept across the world, virtually every research supercomputer lit up to support Covid-19 investigations. But even as the world transformed, the fairly stable status quo of simulation-based scientific computing was itself beginning to more rapidly change with the burgeoning field of AI and AI-specific accelerators, including the Cerebras CS-2.

Cerebras Systems’ dinner plate-sized chips are revolutionizing the field of AI

When the chip is the size of a big pizza pie... that's Cerebras

Accelerating insights in large scale AI projects

Leading research institutes are choosing HPE Superdome Flex as the basis of new supercomputing systems designed to accelerate AI. Both the University of Edinburgh and Pittsburgh Supercomputing Center (PSC) are combining Superdome Flex with Cerebras CS-1, an AI accelerator based on the largest processor in the industry.

Cerebras WSE-2: The Pizza Processor

2.6 trillion (!) transistors, 850,000 compute cores, 40 GB of on-chip memory, 20 kW power consumption: Cerebras' Wafer Scale Engine 2 is extreme in every respect. The name says it all: the 7 nm chip occupies nearly an entire 300 mm wafer. The chip is now in service at the LRZ in Garching near Munich.

Cerebras, TotalEnergies Announce Stencil Algorithm Leap

Cerebras—in collaboration with French multinational TotalEnergies—has announced the development of a massively scalable stencil algorithm: a development made possible by the use of one of Cerebras’ CS-2 systems.

HPE and Cerebras to Install AI Supercomputer at Leibniz Supercomputing Centre

Cerebras' CSoft software update to version 1.2 gives developers using PyTorch and TensorFlow access to their powerful CS-2 systems through Cerebras Cloud.

The World’s Largest Chip Just Received A Major Machine Learning-Flavored Upgrade

Cerebras Systems, makers of the world’s largest chip, has announced that its CS-2 system now supports PyTorch and TensorFlow which will make it possible for researchers to quickly and easily train models with billions of parameters.

How Cerebras CS-2 update stands up to competitors’ offerings

AI hardware and software vendor Cerebras Systems released an updated version of its platform that includes integrated support for the open source TensorFlow and PyTorch machine learning frameworks.

The world’s largest chip just received a major machine learning-flavored upgrade

The Cerebras CS-2 is the world’s fastest AI system and is powered by its Wafer-Scale Engine 2 (WSE-2) processor. With the release of version 1.2 of the Cerebras Software Platform (CSoft), the CS-2 now supports additional machine learning frameworks, which will give developers even more choice when it comes to the types of models they want to run.

‘Bigger is better’ is back for hardware – without any obvious benefits

When I first saw an image of the 'wafer-scale engine' from AI hardware startup Cerebras, my mind rejected it. The company's current product is about the size of an iPad and uses 2.6 trillion transistors that contribute to over 850,000 cores.

Wafer-sized Cerebras AI chips play nicer with PyTorch, TensorFlow

Good news for those who like their AI chips big: Cerebras Systems has expanded support for the popular open-source PyTorch and TensorFlow machine-learning frameworks on the Wafer-Scale Engine 2 processors that power its CS-2 system.

How to Be a Fearless Engineer with Cerebras Systems Founder and CEO Andrew Feldman

Innovators don’t see limitations – they see challenges. And that’s exactly what happened when Andrew Feldman and his team at Cerebras Systems were told that it was impossible to build a computer chip that could deliver the same performance as hundreds of graphics processing units.

AI Chip Startups Pull In Funding as They Navigate Supply Constraints

Investors are funneling billions of dollars into startups like Cerebras Systems that make chips designed for artificial-intelligence applications, which have largely avoided the supply-chain constraints and backlogs faced by larger chip makers, startup executives, investors and industry analysts say.

nference Accelerates Self-Supervised Language Model Training With Cerebras CS-2 System

The ability to harness vast amounts of health data using advanced AI technology will lead to new discoveries and insights needed to improve patient care

Cerebras and nference Launch NLP Collaboration

High performance AI compute company Cerebras Systems and nference, an AI-driven health technology company, today announced a collaboration to accelerate natural language processing (NLP) for biomedical research and development by orders of magnitude with a Cerebras CS-2 system installed at the nference headquarters in Cambridge, Mass.

Startups bag billions to fill gaps left by chip world giants

Venture capitalists funneled billions into semiconductor startups like Cerebras Systems in 2021, we're told, targeting designers of machine-learning technologies that fulfill specific or niche needs.

Cerebras CS-2 System to Accelerate NLP for Biomedical R&D

Cerebras Systems, a pioneer in high performance artificial intelligence (AI) compute, and nference, an AI-driven health technology company, today announced a collaboration to accelerate natural language processing (NLP) for biomedical research and development by orders of magnitude with a Cerebras CS-2 system installed at the nference headquarters in Cambridge, Mass.

Most Expensive Process in the World Explained

The Cerebras CS-2 system for AI is a piece of art

Cambrian-AI 2022 Predictions: Expect More Than Just New Chips

Cerebras should be ready to focus on customer success stories in 2022, having built an accessible, if not expensive, system-level solution that has been adopted in HPC and a few enterprises.

AI in 2022: Here are More AI and Tech Predictions from IT Experts

As 2022 begins, the progress and continuing evolution of AI is in full swing across the worlds of industry, manufacturing, retail, banking and finance, healthcare and medicine and an expanding range of other fields.

10 NLP Predictions for 2022

Natural language processing (NLP) has been one of the hottest sectors in AI over the past two years. Will the string of big data breakthroughs continue into 2022? We checked in with industry experts -- including Natalia Vassilieva from Cerebras Systems -- to find out.

Memory Bottlenecks: Overcoming a Common AI Problem

Wafer-scale AI accelerator company Cerebras Systems has devised a memory bottleneck solution at the far end of the scale. At Hot Chips, the company announced MemoryX, a memory extension system for its CS-2 AI accelerator system aimed at high-performance computing and scientific workloads.

THE 10 COOLEST AI CHIPS OF 2021

Cerebras Systems said its Wafer Scale Engine 2 chip is the “largest AI processor ever made,” consisting of 2.6 trillion transistors, 850,000 cores and 40 GB of on-chip memory. The startup said those specifications give the WSE-2 chip a massive advantage over GPU competitors.

IEEE Spectrum’s biggest semiconductor headlines of 2021

Last April, Cerebras Systems topped its original, history-making AI processor with a version built using a more advanced chipmaking technology. The result was a more than doubling of the on-chip memory to an impressive 40 gigabytes, an increase in the number of processor cores from the previous 400,000 to a speech-stopping 850,000, and a mind-boggling boost of 1.4 trillion additional transistors.

Cerebras CS-2 Aids in Fight Against SARS-CoV-2

One company contributing to the fight with artificial intelligence and machine learning is Cerebras Systems. The second iteration of the company’s Wafer-Scale Engine machine, the CS-2 system, plays an important role as part of the AI Testbed at Argonne National Laboratory and has contributed to a multi-agency COVID-19 reproduction study that was nominated as a Gordon Bell Special Prize finalist.

LLNL Establishes AI Innovation Incubator to Advance AI for Applied Science

Lawrence Livermore National Laboratory has established the AI Innovation Incubator (AI3), a collaborative hub aimed at uniting experts in artificial intelligence from LLNL, industry and academia including Cerebras Systems to advance AI for large-scale scientific and commercial applications.

EE Times Weekend: Andrew Feldman: ‘Let Your Passion Be Your Guide’

EE Times interviews Cerebras CEO Andrew Feldman on leadership, personal projects and the biggest threat to society posed by technology today

The Most Important Compute Workloads of a Generation: How Cerebras Systems is Accelerating AI’s Potential

It’s a bird, it’s a plane, it’s the largest AI processor ever made! In this week’s Fish Fry podcast, Andy Hock (Cerebras Systems) joins me to chat about the largest AI processor ever made – the 7 nm wafer scale engine 2, the details of their brain-scale AI training, and how Cerebras Systems is democratizing access to high performance AI computation.

Big Data Industry Predictions for 2022

Cerebras Systems VP Andy Hock predicts that, "In 2022, AI will continue to grow as a valuable and critical workload for enterprise organizations across industries. We will see a larger number of teams investing in world-class AI computing to accelerate their research and business..."

Exascale Ambitions

Simon McIntosh-Smith discusses the role of the ExCALIBUR project in ensuring that UK research is at the forefront of HPC -- including a Cerebras CS-1

Companies Powering the New Economy

This year has been marked by continued uncertainty and upheaval of our consumer habits and supply-and-demand balance. The dynamic business landscape has been challenging for even the most successful corporations, let alone for startups that are earlier in their efforts to establish market traction and growth.

Wisdom From The Women Leading The AI Industry, With Natalia Vassilieva of Cerebras

As part of their series about the women leading the Artificial Intelligence industry, Tyler Gallagher interviewed Natalia Vassilieva, Director of Product, Machine Learning at Cerebras Systems.

How AI Is Aiming at the Bad Math of Drug Development

GlaxoSmithKline Plc hopes to double its drug success rate to 20% by using AI, teaming up with partners including DNA testing provider 23andMe Holding Co. and Cerebras Systems Inc., an upstart chipmaker that provides computer systems to crunch very large data sets.

How the Cloud Powers Moore’s Law, and More

Additional innovations such as three-dimensional chip stacking and Cerebras wafer-scale chips may help extend Moore’s law for another decade or two, at least.

Cerebras Systems & G42 join to bring high-performance AI to Middle East

Cerebras Systems and G42 signed a memorandum of understanding (MOU) at GMIS, committing to offer high-performance AI capabilities to the Middle East. G42 will enhance its technology stack with Cerebras’ CS-2 systems to give unrivaled AI compute capabilities to its partners and the broader ecosystem.

Cerebras Systems, G42 To Bring AI Compute Capabilities To The Region

Artificial intelligence (AI) compute solutions provider Cerebras Systems and G42, the UAE-based AI and cloud computing company, have signed a memorandum of understanding (MOU) at GMIS, under which they will bring high performance AI capabilities to the Middle East.

THE 10 HOTTEST SEMICONDUCTOR STARTUP COMPANIES OF 2021

Semiconductor startups like Cerebras Systems are offering novel ways to improve the performance, efficiency, economics and bandwidth of servers and beyond with new takes on CPUs, accelerators and connectivity solutions.

World’s largest computer chip enters Mideast to push AI adoption further

DUBAI: It seems only fitting that the world’s biggest computer chip, developed by US-based Cerebras, be deployed in the Middle East, home to some of the world’s entertaining superlatives — biggest tower, deepest swimming pool, among many other “firsts.”

Cerebras Systems and G42 sign a MoU for artificial intelligence

Cerebras Systems and G42 today announced at GMIS the signing of a memorandum of understanding (MOU) under which they will bring high performance AI capabilities to the Middle East. G42, who manages the region’s largest cloud computing infrastructure, will upgrade its technology stack with Cerebras’ industry-leading CS-2 systems to deliver unparalleled AI compute capabilities to its partners and the broader ecosystem.

Cerebras Systems Named to Fast Company’s Annual List of the World’s Most Innovative Companies for 2021

Creator of the world’s fastest AI supercomputer among top-ranked companies in the Artificial Intelligence category