The Cerebras Scaling Law: Faster Inference Is Smarter AI

June 11, 2025

Blog

Cerebras and Qualcomm Unleash ~10X Inference Performance Boost with Hardware-Aware LLM Training

March 11, 2024

Blog

Cerebras Architecture Deep Dive: First Look Inside the HW/SW Co-Design for Deep Learning [Updated]

May 22, 2023

Blog

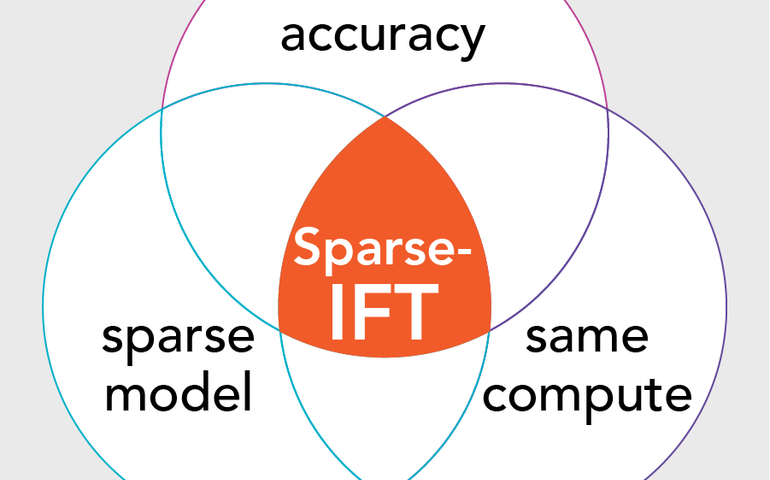

Can Sparsity Make AI Models More Accurate?

March 22, 2023

Blog

Harnessing the Power of Sparsity for Large GPT AI Models

November 28, 2022

Blog